Having watched Scott Hanselman’s excellent introduction to setting up your first OData service, I thought it would be easy to get started quickly on an OData service for Microsoft’s Data Market.

Basically, you create a database, create an ADO.NET Entity Data Model from it, create a WCF Data Service tied to that entity data model, host it all in an ASP.NET web site, and it works.

But for me it didn’t, and I’ve spent ages working out why.

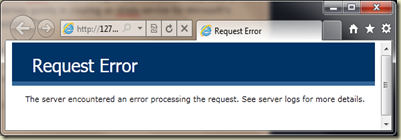

This is what I got:

The first thing you think of is to set a breakpoint, but the error, wherever it is, is deep inside Microsoft code in the constructor for the entity model. Also, I’m running this on a development system using Cassini (the Visual Studio development web server), so there are no server logs to check.

How then do you find the error?

After a lot of digging around, I found this post and added the attribute below to the data service class:

```csharp
using System.Data.Services;
using System.Data.Services.Common;

namespace Services.Web
{
    // Return full exception details to the client instead of a generic
    // error message, so the underlying cause becomes visible.
    [System.ServiceModel.ServiceBehavior(IncludeExceptionDetailInFaults = true)]
    public class ChaosKit : DataService<ChaosKitEntities>
    {
        // This method is called only once to initialize service-wide policies.
        public static void InitializeService(DataServiceConfiguration config)
        {
            // TODO: set rules to indicate which entity sets and service operations are visible, updatable, etc.
            // Examples:
            // config.SetEntitySetAccessRule("MyEntityset", EntitySetRights.AllRead);
            // config.SetServiceOperationAccessRule("MyServiceOperation", ServiceOperationRights.All);
            config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
        }
    }
}
```

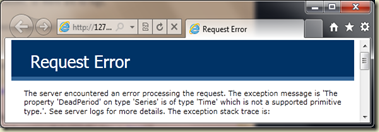

Now I get:

So we discover that WCF Data Services doesn’t like the data type “Time”. Finally!
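If you would rather record the details yourself than read them in the browser (Cassini gives you no logs to fall back on), one option is to flatten the whole exception chain into text. This is a minimal sketch under stated assumptions. `ExceptionChain`, `RootCause` and `Describe` are hypothetical names that are not part of WCF Data Services. The code only uses `Exception.InnerException` to walk from the outermost wrapper down to the innermost cause.

```csharp
using System;
using System.Text;

// Hypothetical helper, not part of WCF Data Services: turns an exception and
// all of its inner exceptions into readable text, so the innermost cause is
// visible even when it was thrown deep inside framework code.
public static class ExceptionChain
{
    // Follows InnerException links and returns the innermost exception.
    public static Exception RootCause(Exception ex)
    {
        if (ex == null) throw new ArgumentNullException("ex");
        while (ex.InnerException != null)
        {
            ex = ex.InnerException;
        }
        return ex;
    }

    // Returns one line per exception, outermost first, indented two spaces
    // per level of nesting: "<full type name>: <message>".
    public static string Describe(Exception ex)
    {
        if (ex == null) throw new ArgumentNullException("ex");
        var sb = new StringBuilder();
        int depth = 0;
        for (Exception current = ex; current != null; current = current.InnerException)
        {
            sb.Append(' ', depth * 2);
            sb.Append(current.GetType().FullName);
            sb.Append(": ");
            sb.AppendLine(current.Message);
            depth++;
        }
        return sb.ToString();
    }
}
```

In the data service, you might call `ExceptionChain.Describe(args.Exception)` from an override of `DataService<T>.HandleException(HandleExceptionArgs args)` and write the result to `System.Diagnostics.Trace`. Whether an error raised while the entity model is being loaded is actually routed through that override is an assumption worth checking.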

I hope this helps you with the same kinds of problems.